Master Large Models! Deploy with Replicate in One Click

Replicate is a cloud platform for running machine learning models. It lets you run models through a cloud API without needing to understand their complex internal structure.

Replicate lets you run models from Python code or a Jupyter Notebook, and deploy and optimize models in the cloud. You can run open-source models published by others, or package and publish your own. A single API call is enough to generate images, run and optimize open-source models, or deploy custom models. By calling Replicate’s API from Python, you run a model on Replicate and get back its prediction results.

How Model Prediction Works

Whenever you run a model, you create a prediction. A prediction is the result of passing new, previously unseen input data to a trained model and collecting the model’s output.

Some models run very quickly and can return results in milliseconds. Other models take longer to run, especially generative models, such as models that generate images based on text prompts.

For these longer-running models, you need to poll the API to check the prediction status. A model prediction can be in any of the following states:

- Starting: The prediction is starting. If this state lasts more than a few seconds, it’s usually because a new worker is being started to run the prediction.
- Processing: The model’s predict() method is running.
- Succeeded: The prediction completed successfully.
- Failed: The prediction encountered an error during processing.
- Canceled: The user canceled the prediction.
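The polling described above can be sketched as a small loop. This is a minimal illustration, not Replicate's own implementation: `wait_for_prediction` and its `fetch_status` callable are hypothetical names, and the lowercase status strings (`starting`, `processing`, `succeeded`, `failed`, `canceled`) are assumed to be what the API reports.

```python
import time
from typing import Callable

# Assumed API status values for predictions that will not change again.
TERMINAL_STATES = {"succeeded", "failed", "canceled"}


def wait_for_prediction(
    fetch_status: Callable[[], str],
    interval: float = 1.0,
    timeout: float = 300.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Call fetch_status() repeatedly until the prediction reaches a terminal state.

    fetch_status is a hypothetical callable that returns the current status
    string, for example by querying the API for a single prediction.
    """
    deadline = time.monotonic() + timeout
    while True:
        status = fetch_status()
        if status in TERMINAL_STATES:
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"prediction still '{status}' after {timeout} seconds")
        # Still starting or processing: wait before asking again.
        sleep(interval)
```

With the official Python client, `fetch_status` could plausibly wrap a call to `prediction.reload()` followed by reading `prediction.status`. Passing the fetcher in as a parameter keeps the loop independent of any particular client and easy to try out with canned statuses.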

After logging in, you can view the prediction list on the dashboard, which summarizes each prediction’s status, run time, and other details:

| ID | Model | Source | Status | Run Time | Created |
|---|---|---|---|---|---|
| w42y27yw4… | zeke/haiku-standard | Web | Canceled | 0 milliseconds | 10 seconds ago |
| t54jo2as… | replicate/hello-world | Web | Succeeded | 5 milliseconds | 1 minute ago |
| f1ekghefm… | kuprel/min-dalle | API | Succeeded | 15 seconds | 2 minutes ago |

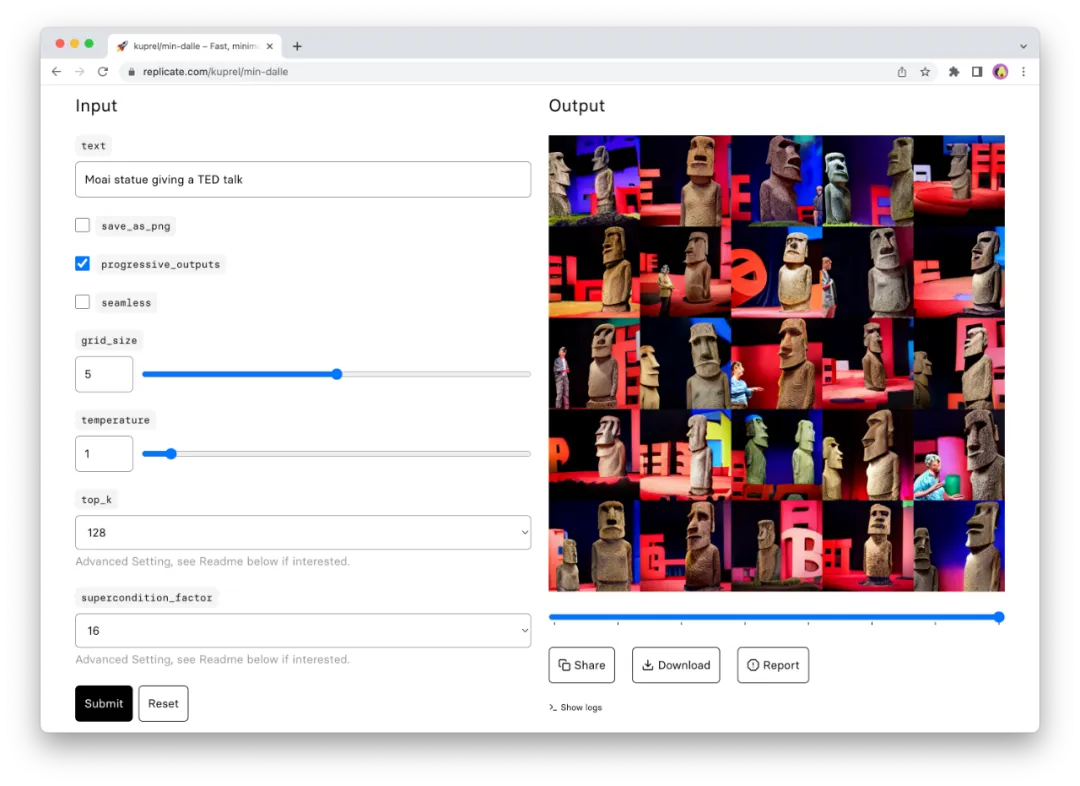

How to Run Models in Browser

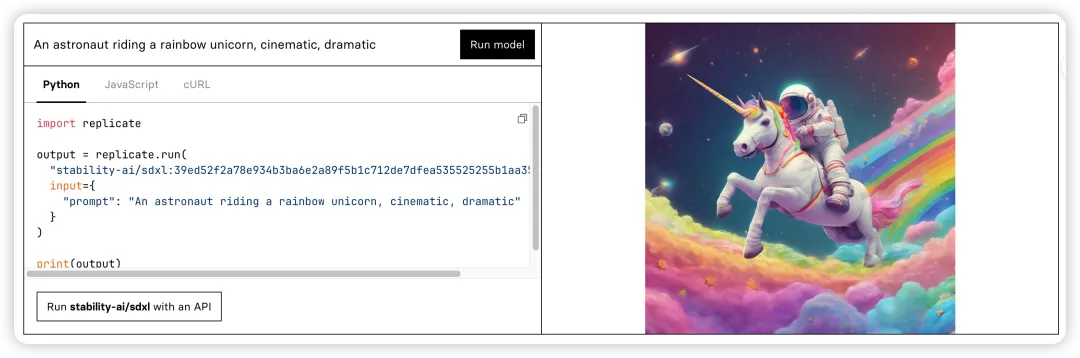

You can run models on Replicate using the cloud API or web browser. The web page allows you to visually see all inputs of the model and generates a form that can run directly from the browser, as shown below:

How to Use the API to Run Models

The web page is great for exploring a model, but when you’re ready to integrate models into chatbots, websites, or mobile applications, the API comes into play.

Replicate’s HTTP API can be used with any programming language, and there are client libraries for Python, JavaScript, and other languages to make using the API more convenient.
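To illustrate what a raw HTTP call might look like without a client library, here is a minimal sketch using only Python's standard library. The helper names `build_prediction_request` and `send` are hypothetical. The endpoint URL, the `Bearer` authorization scheme, and the `version`/`input` body fields are assumptions based on Replicate's public HTTP API and should be checked against the current API reference.

```python
import json
import urllib.request

# Assumed endpoint for creating predictions.
API_URL = "https://api.replicate.com/v1/predictions"


def build_prediction_request(token: str, version: str, model_input: dict) -> urllib.request.Request:
    """Build (but do not send) a POST request that would create a prediction."""
    body = json.dumps({"version": version, "input": model_input}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response (requires network access)."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

Any language with an HTTP client can follow the same pattern: serialize the model version and inputs as JSON, attach the API token in a header, and POST the result.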

Using the Python client, you can create model predictions in just a few lines of code. First, install the Python library:

    pip install replicate

Set up authentication via environment variables:

    export REPLICATE_API_TOKEN=<paste-your-token-here>
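Before making any calls, it can help to confirm that the variable is actually visible to your Python process. The following is a small sketch; `get_replicate_token` is a hypothetical helper and not part of the replicate library.

```python
import os
from typing import Mapping


def get_replicate_token(env: Mapping[str, str] = os.environ) -> str:
    """Return the API token from the environment, or fail with a clear message."""
    token = env.get("REPLICATE_API_TOKEN", "").strip()
    if not token:
        raise RuntimeError(
            "REPLICATE_API_TOKEN is not set; export it in the shell that starts Python"
        )
    return token
```

Failing early with an explicit message is usually easier to debug than an authentication error returned by a later API call.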

Then you can run any open-source model on Replicate through Python code. The following example runs stability-ai/sdxl:

    import replicate

    # Run the model with a text prompt; the hash after the colon pins a specific model version.
    output = replicate.run(
        "stability-ai/sdxl:39ed52f2a78e934b83b6ae2a89f5b1c712de7dfea535525255b1aa35c5565e0",
        input={"prompt": "An astronaut riding a rainbow unicorn, cinematic, dramatic"},
    )
    print(output)